Today, Google announced the development of Imagen Video, a text-to-video AI mode capable of producing 1280×768 videos at 24 frames per second from a written prompt. Currently, it’s in a research phase, but its appearance five months after Google Imagen points to the rapid development of video synthesis models.

Only six months after the launch of OpenAI’s DALLE-2 text-to-image generator, progress in the field of AI diffusion models has been heating up rapidly. Google’s Imagen Video announcement comes less than a week after Meta unveiled its text-to-video AI tool, Make-A-Video.

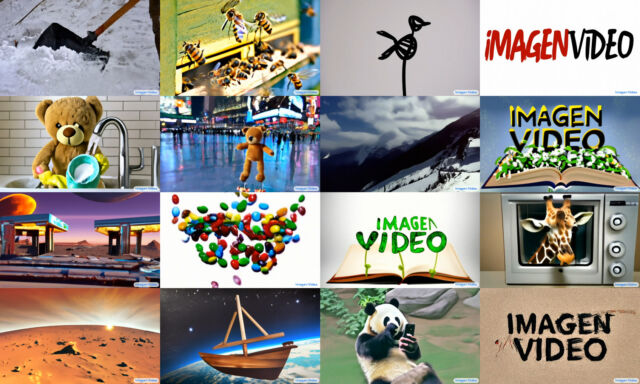

According to Google’s research paper, Imagen Video includes several notable stylistic abilities, such as generating videos based on the work of famous painters (the paintings of Vincent van Gogh, for example), generating 3D rotating objects while preserving object structure, and rendering text in a variety of animation styles. Google is hopeful that general-purpose video synthesis models can “significantly decrease the difficulty of high-quality content generation.”

The key to Imagen Video’s abilities is a “cascade” of seven diffusion models that transform the initial text prompt (such as “a bear washing the dishes”) into a low-resolution video (16 frames, 24×48 pixels, at 3 fps), then upscales it into progressively higher resolutions with higher frame rates with each step. The final output video is 5.3 seconds long.

Video examples presented on the Imagen Video website range from the mundane (“Melting ice cream dripping down the cone”) to the more fantastic (“Flying through an intense battle between pirate ships on a stormy ocean.”) They contain obvious artifacts, but show more fluidity and detail than earlier text-to-image models such as CogVideo that debuted five months ago.

Another Google-adjacent text-to-video model also officially debuted today. Called Phenaki, it can create longer videos from detailed prompts. That, along with DreamFusion, which can create 3D models from text prompts, shows that competitive development on diffusion models continues rapidly, with the number of AI papers on arXiv growing exponentially at a rate that makes it difficult for some researchers to keep up with the latest developments.

Training data for Google Imagen Video comes from the publicly available LAION-400M image-text dataset and “14 million video-text pairs and 60 million image-text pairs,” according to Google. As a result, it has been trained on “problematic data” filtered by Google but still can contain sexually explicit and violent content —as well as social stereotypes and cultural biases. The firm is also concerned its tool may be used “to generate fake, hateful, explicit or harmful content.”

As a result, it’s unlikely we’ll see a public release any time soon: “We have decided not to release the Imagen Video model or its source code until these concerns are mitigated,” says Google.