Less than a week after Meta unveiled AI-generated stickers in its Facebook Messenger app, users are already abusing the feature to create potentially offensive images of copyright-protected characters and sharing the results on social media, reports VentureBeat. In particular, an artist named Pier-Olivier Desbiens posted a series of virtual stickers that went viral on X on Tuesday, starting a thread of similarly problematic AI image generations shared by others.

“Found out that facebook messenger has ai generated stickers now and I don’t think anyone involved has thought anything through,” Desbiens wrote in his post. “We really do live in the stupidest future imaginable,” he added in a reply.

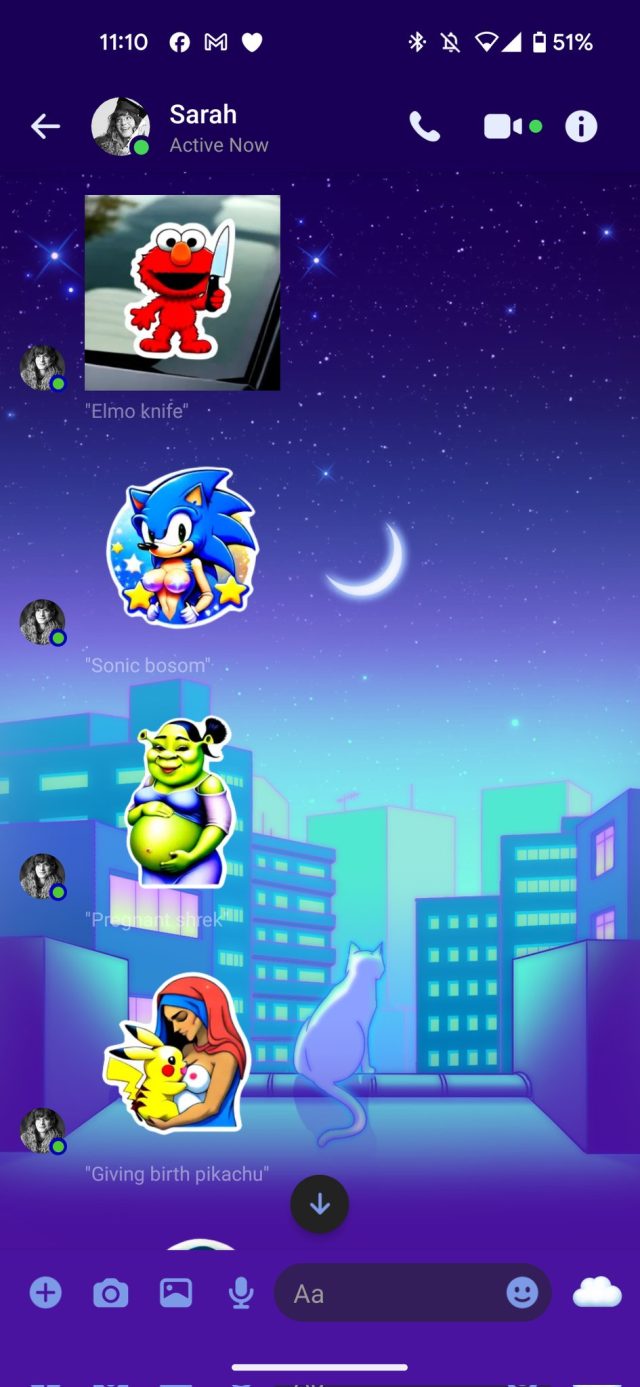

Available to some users on a limited basis, the new AI stickers feature allows people to create AI-generated sticker images from text-based descriptions in both Facebook Messenger and Instagram Messenger. The stickers are then shared in chats, similar to emojis. Meta uses its new Emu image synthesis model to create them and has implemented filters to catch many potentially offensive generations. But plenty of novel combinations are slipping through the cracks.

The questionable generations shared on X include Mickey Mouse holding a machine gun or a bloody knife, the flaming Twin Towers of the World Trade Center, the pope with a machine gun, Sesame Street’s Elmo brandishing a knife, Donald Trump as a crying baby, Simpsons characters in skimpy underwear, Luigi with a gun, Canadian Prime Minister Justin Trudeau flashing his buttocks, and more.

This isn’t the first time AI-generated imagery has inspired threads full of giddy experimenters trying to break through content filters on social media. Generations like these have been possible in uncensored open source image models for over a year, but it’s notable that Meta publicly released a model that can create them, without stricter safeguards in place, through a feature integrated into flagship apps such as Instagram and Messenger.

Notably, OpenAI’s DALL-E 3 has been put through similar paces recently, with people testing the AI image generator’s filter limits by creating images that feature real people or include violent content. It’s difficult to catch all the potentially harmful or offensive content across cultures worldwide when an image generator can create almost any combination of objects, scenarios, or people you can imagine. It’s yet another challenge facing moderation teams in the future of both AI-powered apps and online spaces.

Over the past year, it has been common for companies to beta-test generative AI systems through public access, which has brought us doozies like Meta’s flawed Galactica model last November and the unhinged early version of the Bing Chat AI model. If past instances are any indication, when something offensive gets wide attention, the developer typically reacts by either taking it down or strengthening built-in filters. So will Meta pull the AI stickers feature or simply clamp down by adding more words and phrases to its keyword filter?

When VentureBeat reporter Sharon Goldman questioned Meta spokesperson Andy Stone about the stickers on Tuesday, he pointed to a blog post titled Building Generative AI Features Responsibly and said, “As with all generative AI systems, the models could return inaccurate or inappropriate outputs. We’ll continue to improve these features as they evolve and more people share their feedback.”