Microsoft brought DirectStorage to Windows PCs this week. The API promises faster load times and more detailed graphics by letting game developers make apps that load graphical data from the SSD directly to the GPU. Now, Nvidia and IBM have created a similar SSD/GPU technology, but they are aiming it at the massive data sets in data centers.

Instead of targeting console or PC gaming like DirectStorage, Big Accelerator Memory (BaM) is meant to give data centers quick access to vast amounts of data in GPU-intensive applications such as machine-learning training, analytics, and high-performance computing, according to a research paper spotted by The Register this week. The paper, entitled “BaM: A Case for Enabling Fine-grain High Throughput GPU-Orchestrated Access to Storage” (PDF), was written by researchers at Nvidia, IBM, and a few US universities. It proposes a more efficient way to run next-generation applications in data centers with massive computing power and memory bandwidth.

BaM also differs from DirectStorage in that the creators of the system architecture plan to make it open source.

The paper says that while CPU-orchestrated storage data access is suitable for “classic” GPU applications, such as dense neural network training with “predefined, regular, dense” data access patterns, it causes too much “CPU-GPU synchronization overhead and/or I/O traffic amplification.” That makes it less suitable for next-gen applications that make use of graph and data analytics, recommender systems, graph neural networks, and other “fine-grain data-dependent access patterns,” the authors write.
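To make "fine-grain data-dependent access patterns" concrete, here is a minimal sketch (not taken from the paper) of a breadth-first search over a graph stored in compressed sparse row (CSR) form. Which slices of the `col_idx` array get read at each level depends on the vertex IDs discovered at the previous level, so a CPU staging data ahead of time cannot know which bytes the GPU will need next. The graph, the `bfs_reads` function, and its read log are hypothetical and exist only for illustration.

```python
from typing import List, Tuple


def bfs_reads(row_ptr: List[int], col_idx: List[int], source: int) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Run BFS on a CSR graph and log every neighbor-list slice it reads.

    Returns the BFS visit order and the (start, end) index ranges into
    col_idx, in the order they were accessed.
    """
    visited = {source}
    frontier = [source]
    order = [source]
    reads: List[Tuple[int, int]] = []
    while frontier:
        next_frontier = []
        for v in frontier:
            start, end = row_ptr[v], row_ptr[v + 1]
            # This range is only known once v itself has been discovered.
            reads.append((start, end))
            for u in col_idx[start:end]:
                if u not in visited:
                    visited.add(u)
                    next_frontier.append(u)
                    order.append(u)
        frontier = next_frontier
    return order, reads


# A tiny 5-vertex graph: 0->1, 0->2, 1->3, 2->3, 3->4
row_ptr = [0, 2, 3, 4, 5, 5]
col_idx = [1, 2, 3, 3, 4]
order, reads = bfs_reads(row_ptr, col_idx, 0)
print(order)  # [0, 1, 2, 3, 4]
print(reads)  # [(0, 2), (2, 3), (3, 4), (4, 5), (5, 5)]
```

In a real workload the graph would be far too large for GPU memory, and each of those small, unpredictable slices would have to come from storage.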

Like DirectStorage, BaM works alongside an NVMe SSD. According to the paper, BaM “mitigates I/O traffic amplification by enabling the GPU threads to read or write small amounts of data on-demand, as determined by the compute.”
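The amplification argument can be put into rough numbers. Suppose a workload needs a scattered set of small records, and data moves either in large fixed-size chunks (as a coarse, CPU-staged transfer might) or in small blocks fetched on demand. The ratio of bytes moved to bytes actually used is the I/O amplification. The 1 MiB chunk and 4 KiB block sizes, the record layout, and the function names below are illustrative assumptions, not figures from the paper.

```python
from typing import Sequence, Tuple


def bytes_moved(accesses: Sequence[Tuple[int, int]], granularity: int) -> int:
    """Bytes transferred if every `granularity`-sized unit touched by any
    (offset, length) access is fetched exactly once."""
    units = set()
    for offset, length in accesses:
        first = offset // granularity
        last = (offset + length - 1) // granularity
        units.update(range(first, last + 1))
    return len(units) * granularity


def amplification(accesses: Sequence[Tuple[int, int]], granularity: int) -> float:
    """Ratio of bytes moved to bytes the workload actually asked for."""
    useful = sum(length for _, length in accesses)
    return bytes_moved(accesses, granularity) / useful


# Hypothetical workload: eight 64-byte records scattered 10 MiB apart.
MiB = 1 << 20
accesses = [(i * 10 * MiB, 64) for i in range(8)]
print(amplification(accesses, 1 * MiB))  # coarse 1 MiB chunks: 16384.0
print(amplification(accesses, 4096))     # on-demand 4 KiB blocks: 64.0
```

The specific ratios depend entirely on the made-up parameters; the point is only that letting the consumer fetch at a finer grain moves far less unneeded data when accesses are sparse.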

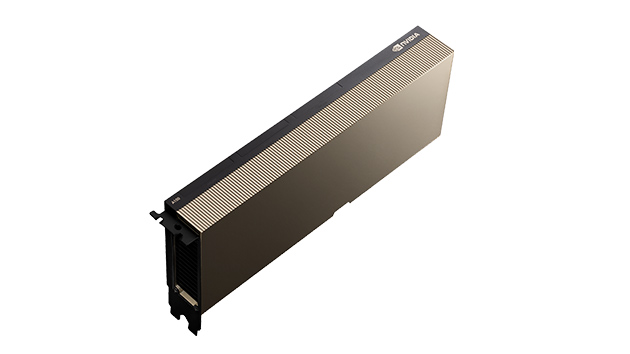

More specifically, BaM uses the GPU’s onboard memory as a software-managed cache, along with a GPU thread software library. The threads receive data from the SSD and move it with the help of a custom Linux kernel driver. The researchers tested a prototype system with an Nvidia A100 40GB PCIe GPU, two 64-core AMD EPYC 7702 CPUs, and 1TB of DDR4-3200 memory, running Ubuntu 20.04 LTS.
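The idea of treating GPU memory as a software-managed cache in front of the SSD can be illustrated with a small, CPU-only Python model. This is a sketch under loud assumptions: the `BlockCache` class, its least-recently-used eviction policy, and the function standing in for the SSD are all hypothetical and do not reflect BaM's actual design, which runs on GPU threads and reaches the SSD through its own library and kernel driver.

```python
from collections import OrderedDict
from typing import Callable, List


class BlockCache:
    """Hypothetical fixed-capacity block cache with LRU eviction.

    `fetch` stands in for a read from the backing store (a simulated SSD here).
    """

    def __init__(self, capacity: int, fetch: Callable[[int], bytes]):
        self.capacity = capacity
        self.fetch = fetch
        self.lines = OrderedDict()  # block_id -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> bytes:
        if block_id in self.lines:
            self.hits += 1
            self.lines.move_to_end(block_id)  # mark as most recently used
            return self.lines[block_id]
        self.misses += 1
        data = self.fetch(block_id)  # miss: go to the backing store
        self.lines[block_id] = data
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used block
        return data


# Simulated SSD: each block's contents are just its ID encoded as bytes.
ssd_reads: List[int] = []


def ssd_fetch(block_id: int) -> bytes:
    ssd_reads.append(block_id)
    return block_id.to_bytes(4, "little")


cache = BlockCache(capacity=2, fetch=ssd_fetch)
for b in [1, 2, 1, 3, 2, 1]:
    cache.read(b)
print(cache.hits, cache.misses, ssd_reads)  # 1 5 [1, 2, 3, 2, 1]
```

LRU is used here only because it is easy to read. A cache shared by thousands of GPU threads also has to handle concurrent lookups and fills, which this single-threaded model ignores.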

The authors noted that even a “consumer-grade” SSD could support BaM with app performance that is “competitive against a much more expensive DRAM-only solution.”