Greetings, dear readers, and congratulations—we’ve reached the end of our four-part series on how Ars Technica is hosted in the cloud, and it has been a journey. We’ve gone through our infrastructure, our application stack, and our CI/CD strategy (that’s “continuous integration and continuous deployment”—the process by which we manage and maintain our site’s code).

Now, to wrap things up, we have a bit of a grab bag of topics to go through. In this final part, we’ll discuss some leftover configuration details I didn’t get a chance to dive into in earlier parts—including how our battle-tested liveblogging system works (it’s surprisingly simple, and yet it has withstood millions of readers hammering at it during Apple events). We’ll also peek at how we handle authoritative DNS.

Finally, we’ll close on something that I’ve been wanting to look at for a while: AWS’s cloud-based 64-bit ARM service offerings. How much of our infrastructure could we shift over onto ARM64-based systems, how much work will that be, and what might the long-term benefits be, both in terms of performance and costs?

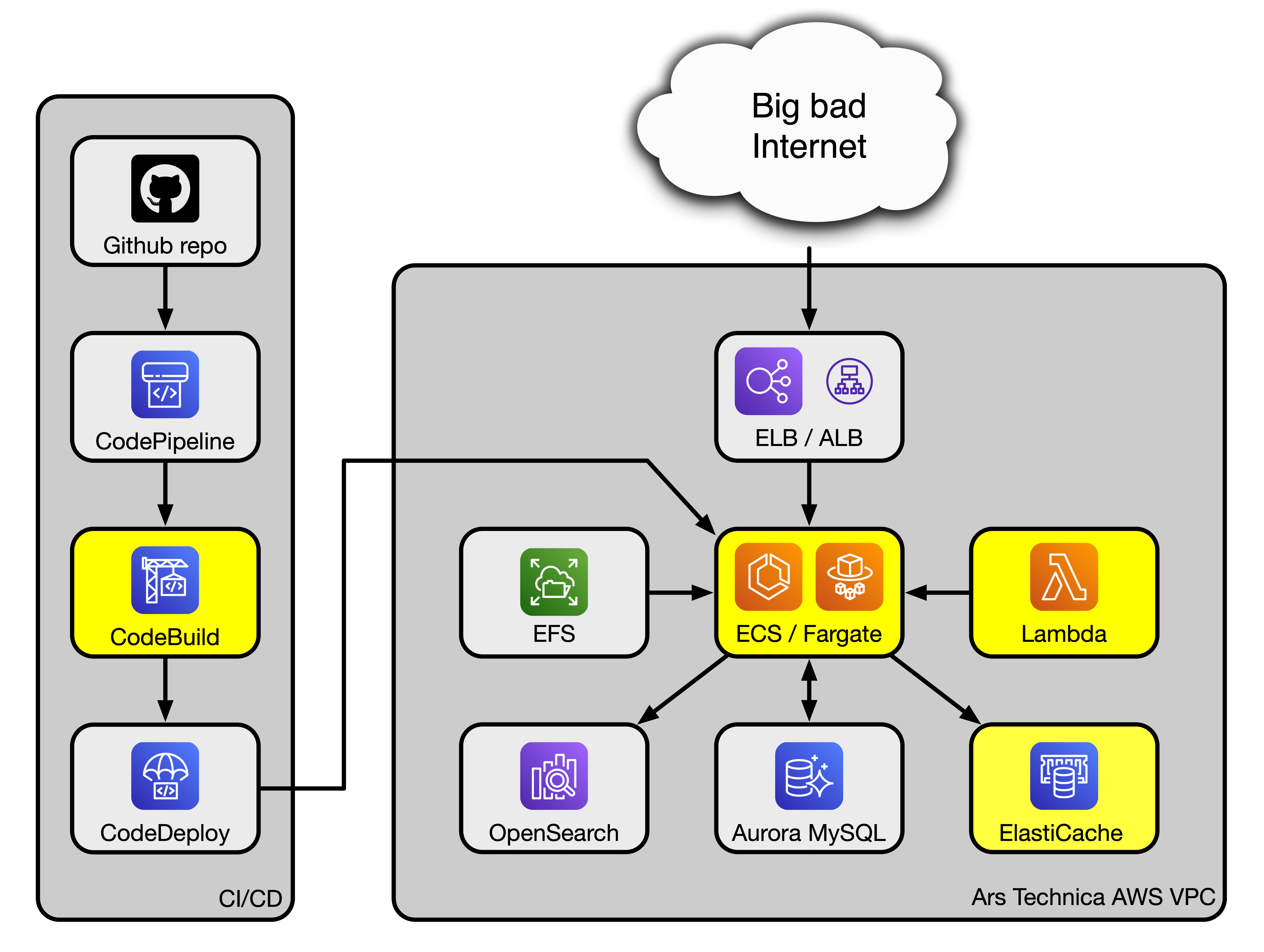

But first, because I know we have readers who like to skip ahead, let’s re-introduce our block diagram and make sure we’re all caught up with what we’re doing today:

The recap: What we’ve got

So, recapping: Ars runs on WordPress for the front page, a smaller WordPress/WooCommerce instance for the merch store, and XenForo for the OpenForum. All of these applications live as containers in ECS tasks (where “task” in this case is functionally equivalent to a Docker host, containing a number of services). Those tasks are invoked and killed as needed to scale the site up and down in response to the current amount of visitor traffic. Various other components of the stack contribute to keeping the site operational (like Aurora, which provides MySQL databases to the site, or Lambda, which we use to kick off WordPress scheduled tasks, among other things).
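To make the "scale up and down with traffic" idea concrete, here is a minimal sketch of a proportional scaling rule in Python. It is only an illustration: the function name, the load metric, and the min/max bounds are all hypothetical, and this is neither AWS's actual autoscaling algorithm nor our production configuration.

```python
import math


def desired_task_count(current_tasks, observed_load, target_load,
                       min_tasks=1, max_tasks=10):
    """Return how many tasks a simple proportional rule would ask for.

    All parameters are illustrative assumptions:
      current_tasks -- tasks running right now
      observed_load -- average load per task (e.g. CPU percent)
      target_load   -- the per-task load we would like to hold
      min_tasks / max_tasks -- hard floor and ceiling on the fleet size
    """
    if current_tasks < 1:
        raise ValueError("current_tasks must be at least 1")
    if target_load <= 0:
        raise ValueError("target_load must be positive")

    # Scale the fleet in proportion to how far we are from the target,
    # rounding up so we never under-provision by a fraction of a task.
    raw = math.ceil(current_tasks * observed_load / target_load)

    # Clamp to the configured bounds.
    return max(min_tasks, min(max_tasks, raw))


if __name__ == "__main__":
    # Four tasks running hot at 90 percent against a 60 percent target.
    print(desired_task_count(4, 90, 60))
    # Traffic has died down; fall back toward the floor.
    print(desired_task_count(4, 15, 60, min_tasks=2))
```

The clamp matters as much as the ratio: the floor keeps the site up during quiet hours, and the ceiling keeps a traffic spike from turning into a billing spike.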

On the left side of the diagram, we have our CI/CD stack. The code that makes Ars work (which for us includes things like our WordPress PHP files, both core and plugin) lives in a private GitHub repo under version control. When we need to change our code (like if there’s a WordPress update), we modify the source in the GitHub repo and then, using a whole set of tools, we push those changes out into the production web environment, and new tasks are spun up containing those changes. (As with the software stack, it’s a little more complicated than that; consult part three for a more granular description of the process!)

Eagle-eyed readers might notice there’s something different about the diagram above: A few of the services are highlighted in yellow. Well spotted! Those are services that we might be able to switch over to run on ARM64 architecture, and we’ll examine that near the end of this article.

Colors aside, there are also several things missing from that diagram. In attempting to keep it as high-level as possible while still being useful, I omitted a whole mess of more basic infrastructure components—and one of those components is DNS. It’s one of those things we can’t operate without, so let’s jump in there and talk about it.