On Tuesday, OpenAI published a new research paper detailing a technique that uses its GPT-4 language model to write explanations for the behavior of neurons in its older GPT-2 model, albeit imperfectly. It’s a step forward for “interpretability,” which is a field of AI that seeks to explain why neural networks create the outputs they do.

While large language models (LLMs) are conquering the tech world, AI researchers still don’t know a lot about their functionality and capabilities under the hood. In the first sentence of OpenAI’s paper, the authors write, “Language models have become more capable and more widely deployed, but we do not understand how they work.”

For outsiders, that likely sounds like a stunning admission from a company that not only depends on revenue from LLMs but also hopes to accelerate them to beyond-human levels of reasoning ability.

But this property of “not knowing” exactly how a neural network’s individual neurons work together to produce its outputs has a well-known name: the black box. You feed the network inputs (like a question), and you get outputs (like an answer), but whatever happens in between (inside the “black box”) is a mystery.

In an attempt to peek inside the black box, researchers at OpenAI utilized its GPT-4 language model to generate and evaluate natural language explanations for the behavior of neurons in a vastly less complex language model, such as GPT-2. Ideally, having an interpretable AI model would help contribute to the broader goal of what some people call “AI alignment,” ensuring that AI systems behave as intended and reflect human values. And by automating the interpretation process, OpenAI seeks to overcome the limitations of traditional manual human inspection, which is not scalable for larger neural networks with billions of parameters.

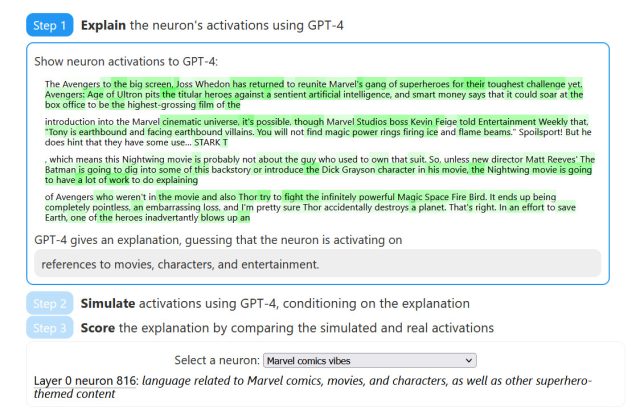

OpenAI’s technique “seeks to explain what patterns in text cause a neuron to activate.” Its methodology consists of three steps:

- Explain the neuron’s activations using GPT-4

- Simulate neuron activation behavior using GPT-4

- Compare the simulated activations with real activations.

To understand how OpenAI’s method works, you need to know a few terms: neuron, circuit, and attention head. In a neural network, a neuron is like a tiny decision-making unit that takes in information, processes it, and produces an output, just like a tiny brain cell making a decision based on the signals it receives. A circuit in a neural network is like a network of interconnected neurons that work together, passing information and making decisions collectively, similar to a group of people collaborating and communicating to solve a problem. And an attention head is like a spotlight that helps a language model pay closer attention to specific words or parts of a sentence, allowing it to better understand and capture important information while processing text.

By identifying specific neurons and attention heads within the model that need to be interpreted, GPT-4 creates human-readable explanations for the function or role of these components. It also generates an explanation score, which OpenAI calls “a measure of a language model’s ability to compress and reconstruct neuron activations using natural language.” The researchers hope that the quantifiable nature of the scoring system will allow measurable progress toward making neural network computations understandable to humans.

So how well does it work? Right now, not that great. During testing, OpenAI pitted its technique against a human contractor that performed similar evaluations manually, and they found that both GPT-4 and the human contractor “scored poorly in absolute terms,” meaning that interpreting neurons is difficult.

One explanation put forth by OpenAI for this failure is that neurons may be “polysemantic,” which means that the typical neuron in the context of the study may exhibit multiple meanings or be associated with multiple concepts. In a section on limitations, OpenAI researchers discuss both polysemantic neurons and also “alien features” as limitations of their method:

Furthermore, language models may represent alien concepts that humans don’t have words for. This could happen because language models care about different things, e.g. statistical constructs useful for next-token prediction tasks, or because the model has discovered natural abstractions that humans have yet to discover, e.g. some family of analogous concepts in disparate domains.

Other limitations include being compute-intensive and only providing short natural language explanations. But OpenAI researchers are still optimistic that they have created a framework for both machine-meditated interpretability and the quantifiable means of measuring improvements in interpretability as they improve their techniques in the future. As AI models become more advanced, OpenAI researchers hope that the quality of the generated explanations will improve, offering better insights into the internal workings of these complex systems.

OpenAI has published its research paper on an interactive website that contains example breakdowns of each step, showing highlighted portions of the text and how they correspond to certain neurons. Additionally. OpenAI has provided “Automated interpretability” code and its GPT-2 XL neurons and explanations datasets on GitHub.

If they ever figure out exactly why ChatGPT makes things up, all of the effort will be well worth it.